Businesses now face a mandate to keep pace with ever-larger language models, fast-growing volumes of data, and the need to turn that data into real-time insights, all in a way that’s sustainable and cost-effective.

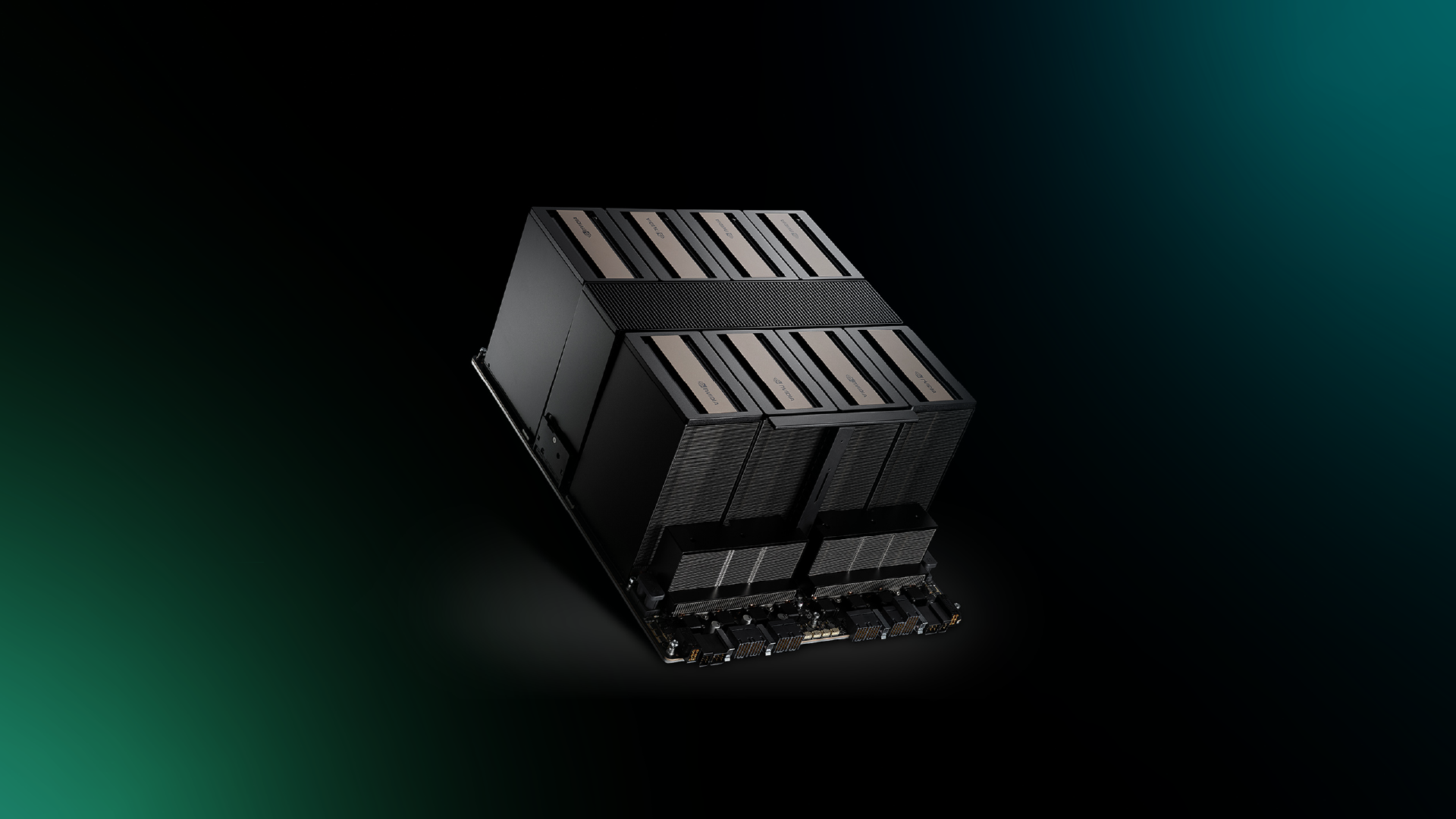

Meeting those needs has become easier with Cirrascale’s AI Innovation Cloud, which now offers the NVIDIA HGX™ B200 platform as part of our NVIDIA GPU Cloud. The addition marks a pivotal leap forward for enterprises, researchers, and AI innovators looking to tap into the next generation of generative AI (GenAI), large language models (LLMs), and high-performance computing (HPC) workloads.

Taking Real-Time Large Language Model Inference to New Levels

The NVIDIA HGX B200 is powered by Blackwell GPUs, bringing unprecedented acceleration to real-time inference for LLMs. Thanks to its advanced second-generation Transformer Engine and new FP4 precision Tensor Cores, the HGX B200 delivers up to 15X faster inference for multi-trillion-parameter models, such as GPT-MoE-1.8T, compared to the previous Hopper generation.

Even better, this accelerated performance comes with 12X lower cost and 12X less energy consumption, making real-time, resource-intensive AI inference not just possible, but practical at scale.

AI Training for the Next Generation of Models

AI model training demands both speed and scalability. The HGX B200’s Blackwell architecture pairs FP8 precision with a faster Transformer Engine, enabling up to 3X faster training for large language models. The platform’s fifth-generation NVLink interconnect provides 1.8TB/s of GPU-to-GPU bandwidth, helping training scale across large GPU clusters. Combined with InfiniBand networking and Magnum IO software, enterprises can efficiently train the most complex models without bottlenecks.
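To put the 1.8TB/s NVLink figure in perspective, here is a minimal back-of-the-envelope sketch of ideal GPU-to-GPU transfer times. The payload sizes are hypothetical, decimal units are assumed (1TB = 1,000GB), and the calculation ignores latency, protocol overhead, and contention, so real transfers will take longer.

```python
# Ideal transfer time over a GPU-to-GPU link (illustrative arithmetic only).
# The bandwidth comes from the text; the payload sizes are hypothetical.

NVLINK_BANDWIDTH_TB_PER_S = 1.8  # fifth-generation NVLink, as quoted above


def transfer_time_ms(payload_gb: float,
                     bandwidth_tb_per_s: float = NVLINK_BANDWIDTH_TB_PER_S) -> float:
    """Return the ideal time in milliseconds to move payload_gb gigabytes."""
    if payload_gb < 0 or bandwidth_tb_per_s <= 0:
        raise ValueError("payload must be >= 0 and bandwidth must be > 0")
    payload_tb = payload_gb / 1000.0               # decimal units: 1 TB = 1000 GB
    return payload_tb / bandwidth_tb_per_s * 1000.0  # seconds -> milliseconds


# Hypothetical payloads, e.g. gradient or activation buffers of various sizes.
for payload_gb in (1, 10, 100):
    print(f"{payload_gb:>4} GB -> {transfer_time_ms(payload_gb):.2f} ms (ideal)")
```

Treat these numbers as a lower bound for capacity planning, not a prediction of measured throughput.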

When it comes to data analytics, the backbone of modern AI workflows, the HGX B200’s dedicated decompression engine accelerates the entire database query pipeline, supporting the latest compression formats, including LZ4, Snappy, and Deflate. This allows you to extract insights at record speed, with up to 6X faster query performance than CPUs and 2X faster than the H100 for the most demanding data analytics tasks.
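For readers less familiar with these formats, the sketch below shows a Deflate round trip on the CPU using Python's standard `zlib` module (which wraps Deflate in a small zlib header). It only illustrates the kind of work a query pipeline does when it reads compressed columns; it does not use or represent the B200's hardware decompression engine, and the sample rows and the `deflate_round_trip` helper are made up for the example.

```python
import zlib


def deflate_round_trip(rows: list[str]) -> tuple[int, int, list[str]]:
    """Compress rows with Deflate, decompress them, and report the sizes.

    Returns (raw_bytes, compressed_bytes, restored_rows).
    """
    raw = "\n".join(rows).encode("utf-8")
    compressed = zlib.compress(raw, 6)  # level 6 is zlib's usual default trade-off
    restored = zlib.decompress(compressed).decode("utf-8").split("\n")
    return len(raw), len(compressed), restored


# Hypothetical, highly repetitive table data, which compresses well.
sample_rows = ["id,region,amount"] + [f"{i},us-west,{i * 10}" for i in range(1000)]

raw_size, compressed_size, restored_rows = deflate_round_trip(sample_rows)
print(f"raw: {raw_size} bytes, compressed: {compressed_size} bytes")
print("round trip intact:", restored_rows == sample_rows)
```

Every query over compressed data pays the decompression step, which is why offloading it to dedicated hardware can shorten the whole pipeline.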

Further, the HGX B200’s massive gains in performance per watt enable data centers to dramatically reduce their energy consumption and carbon footprint. For LLM inference, the platform achieves 12X greater energy efficiency and lowers operational costs by 12X compared to the previous generation, supporting enterprise performance and sustainability initiatives.

Built for Scale and Flexibility

With configurations featuring eight Blackwell GPUs, the HGX B200 delivers an extraordinary 1.4TB of GPU memory and 64TB/s of memory bandwidth. It’s an architecture purpose-built for the most demanding GenAI and data analytics workloads, empowering our customers to:

- Deploy and scale real-time, multi-trillion-parameter language models with unmatched speed and cost-efficiency.

- Train next-gen AI models faster and more efficiently, accelerating innovation.

- Turbocharge data analytics pipelines for rapid, actionable insights.

- Achieve sustainability goals through industry-leading energy efficiency.

- Leverage a future-proof platform designed for the most demanding AI workloads.
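The memory figures quoted for the eight-GPU configuration can be sanity-checked with some simple arithmetic. The sketch below is a rough estimate under stated assumptions: it counts model weights only (no KV cache, activations, or framework overhead), uses decimal terabytes, and assumes a 1.8-trillion-parameter model based on the GPT-MoE-1.8T name. The per-GPU numbers are derived from the rounded totals in the text, so they are approximate.

```python
# Rough capacity check for an eight-GPU HGX B200 (weights only, decimal TB).
# Totals come from the text; the parameter count is an assumption.

TOTAL_GPU_MEMORY_TB = 1.4
TOTAL_BANDWIDTH_TB_PER_S = 64
GPU_COUNT = 8
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP4": 4}


def weight_footprint_tb(param_count: float, bits_per_param: int) -> float:
    """Return the size of the model weights in decimal terabytes."""
    return param_count * bits_per_param / 8 / 1e12


def fits_in_memory(param_count: float, precision: str,
                   capacity_tb: float = TOTAL_GPU_MEMORY_TB) -> bool:
    """True if the weights alone fit in the given aggregate GPU memory."""
    return weight_footprint_tb(param_count, BITS_PER_PARAM[precision]) <= capacity_tb


print(f"per-GPU memory:    ~{TOTAL_GPU_MEMORY_TB / GPU_COUNT * 1000:.0f} GB")
print(f"per-GPU bandwidth: ~{TOTAL_BANDWIDTH_TB_PER_S / GPU_COUNT:.0f} TB/s")

assumed_params = 1.8e12  # assumption: parameter count implied by "GPT-MoE-1.8T"
for precision, bits in BITS_PER_PARAM.items():
    size_tb = weight_footprint_tb(assumed_params, bits)
    verdict = "fits" if fits_in_memory(assumed_params, precision) else "does not fit"
    print(f"{precision}: {size_tb:.1f} TB of weights, {verdict} in {TOTAL_GPU_MEMORY_TB} TB")
```

Under these assumptions, only the FP4 weights fit within a single eight-GPU system, which helps explain why lower-precision formats matter for serving very large models.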

A New Era of Accelerated Computing

Cirrascale aims to provide purpose-built cloud solutions that enable innovators to push the boundaries of what’s possible with AI. The NVIDIA HGX B200, now available in our AI Innovation Cloud, sets a new standard in accelerated computing.

Ready to future-proof your AI workflows? Contact us today to learn more about the power of NVIDIA HGX B200 and Cirrascale’s AI Innovation Cloud.
