As developers continue to build AI applications, workflows, and integrations, it’s important to consider where those inference workloads run. Qualcomm Technologies has partnered with Cirrascale to offer the Inference Cloud powered by Qualcomm, a purpose-built inference service that runs on Qualcomm Cloud AI 100 Ultra inference cards and the Qualcomm AI Suite.

Performance Where It Matters

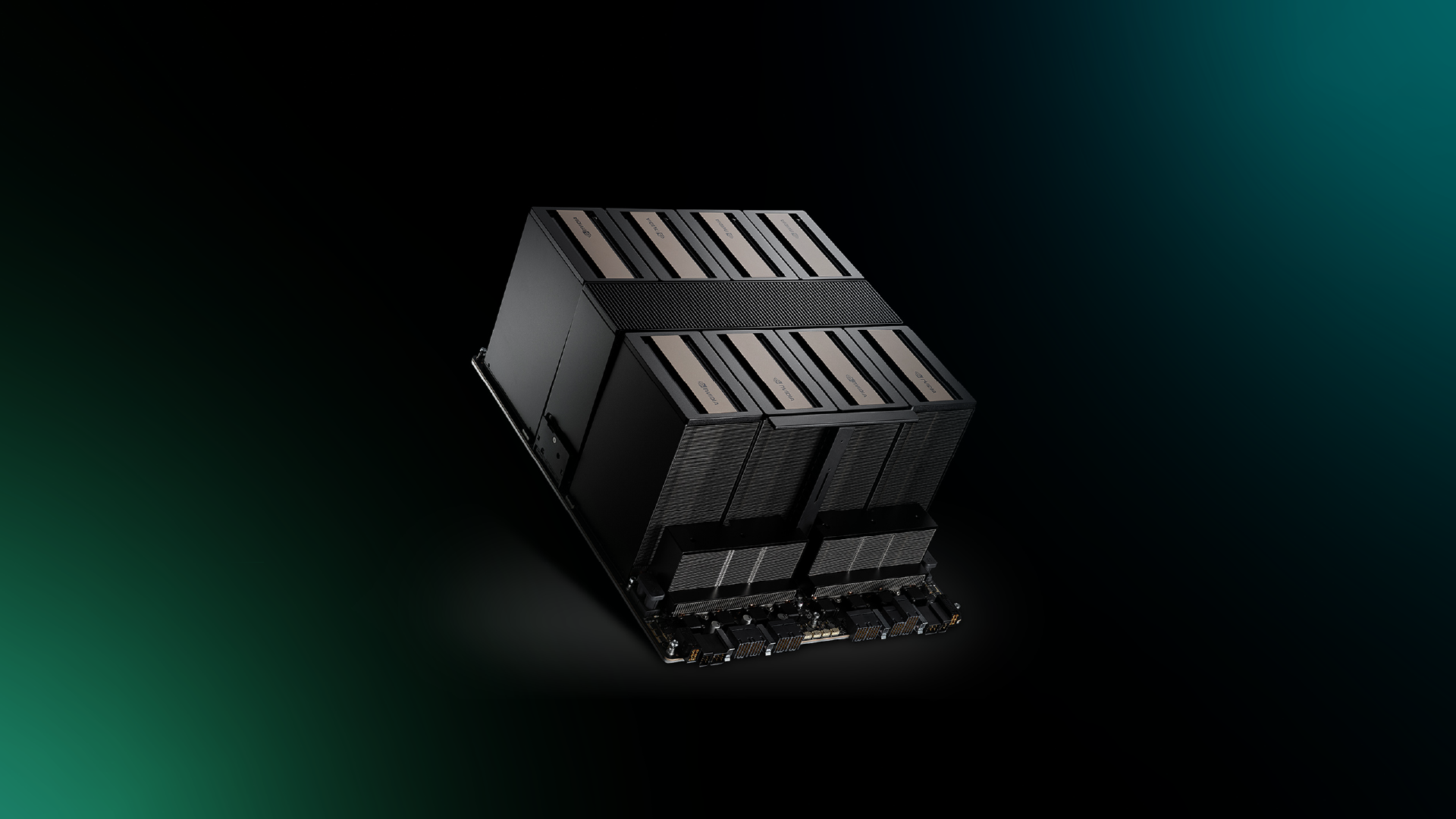

At the heart of the Inference Cloud powered by Qualcomm is the Qualcomm Cloud AI 100 Ultra, an acceleration platform engineered specifically for AI workloads. It is designed to deliver high performance-per-dollar for AI inference, which matters most for businesses facing budget constraints at scale and for real-time applications where latency and efficiency are paramount. Inference Cloud provides the infrastructure to serve these workloads efficiently and cost-effectively while preserving familiar APIs and programming models for developers through the Qualcomm AI Suite.

Build Powerful Applications with Inference Cloud

The Inference Cloud powered by Qualcomm provides a starter set of popular open-source generative AI models to choose from and will soon support user-provided models for full flexibility. While smaller models in the 8B-parameter range can run on a capable developer workstation, larger models are much harder to work with because of the hardware they require.
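A rough back-of-the-envelope calculation shows why model size matters so much. The sketch below estimates only the memory needed to hold the weights (parameter count times bytes per parameter); it deliberately ignores the KV cache, activations, and runtime overhead, so real requirements are higher. The 70B size is an illustrative comparison point, not a statement about which models the service offers.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed just to hold model weights, in GB (1 GB = 1e9 bytes).

    Ignores the KV cache, activations, and runtime overhead, so actual
    requirements are higher than this estimate.
    """
    return num_params * bytes_per_param / 1e9


# Common precisions: FP16/BF16 use 2 bytes per parameter, INT8 uses 1, 4-bit uses 0.5
for name, params in [("8B", 8e9), ("70B", 70e9)]:
    for precision, nbytes in [("FP16", 2), ("INT8", 1), ("4-bit", 0.5)]:
        print(f"{name} @ {precision}: ~{weight_memory_gb(params, nbytes):.0f} GB")
```

At FP16, an 8B model needs about 16 GB for its weights, which fits on a well-equipped workstation GPU, while a 70B model needs about 140 GB before any working memory is counted.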

As with other cloud workloads, once something goes to production, the balance of cost and benefit can become the limiting factor. The Inference Cloud powered by Qualcomm was designed to be an attractive option for AI workloads that need cost-effectiveness at a given speed and scale.
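One way to make "cost-effectiveness at a given speed" concrete is to convert an hourly price and a sustained throughput into a cost per million generated tokens. This is a minimal sketch under two stated assumptions: capacity is billed by the hour, and it is kept fully busy. The numbers in the example are hypothetical and are not Qualcomm or Cirrascale pricing.

```python
def cost_per_million_tokens(hourly_price_usd: float, tokens_per_second: float) -> float:
    """Cost of generating one million tokens on capacity billed by the hour.

    Assumes the capacity runs at the given sustained throughput for the whole hour.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_price_usd / tokens_per_hour * 1_000_000


# Hypothetical inputs for illustration only
cost = cost_per_million_tokens(hourly_price_usd=3.60, tokens_per_second=1000)
print(f"${cost:.2f} per 1M tokens")
```

If traffic is bursty and the capacity sits idle part of the time, the effective cost per token rises accordingly, which is why throughput per dollar matters most at production scale.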

Access Popular AI Models Through OpenAI-compatible APIs

We've made integration straightforward with OpenAI-compatible APIs: pointing an existing codebase at our infrastructure is as simple as updating your endpoint and API key. This lets you quickly evaluate the Qualcomm Cloud AI infrastructure without rewriting your application.
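To show what "OpenAI-compatible" means on the wire, here is a standard-library sketch of a chat completion request: a JSON body with `model` and `messages`, POSTed to `<base_url>/chat/completions` with a bearer token. The base URL and key are placeholders, and whether the endpoint accepts the model ID `Llama-3.1-8B` in this form is an assumption; check the developer portal for the exact values. If you already use the official `openai` Python package, the equivalent change is passing `base_url` and `api_key` to `OpenAI(...)`.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(req: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(req) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


# Hypothetical endpoint and key: substitute the values from your account
req = build_chat_request(
    base_url="https://example.invalid/v1",
    api_key="YOUR_API_KEY",
    model="Llama-3.1-8B",
    prompt="Summarize the benefits of OpenAI-compatible APIs in one sentence.",
)
print(req.get_method(), req.full_url)
# Calling send_chat_request(req) would perform the actual network request
```

Only `base_url` and `api_key` change when moving between OpenAI-compatible providers; the request shape stays the same, which is what keeps existing code portable.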

Use Qualcomm’s Imagine SDK with the AI Suite inference APIs to build your own AI experiences on the processing capabilities of the Qualcomm Cloud AI 100 Ultra card. We also share documented code samples for agents in multiple domains, illustrating common agentic workflows such as web research, retrieval-augmented generation (RAG), data analysis, and content creation.
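The core of a RAG workflow is small: embed the question, retrieve the most similar stored passages, and prepend them to the prompt sent to the chat model. The sketch below shows only the retrieval and prompt-assembly steps with tiny hand-made vectors; it is not the Imagine SDK's API. In a real pipeline the vectors would come from an embedding model and a vector store such as ChromaDB would perform the similarity search.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vec: list[float], docs: list[tuple[str, list[float]]], k: int = 1) -> list[str]:
    """Return the k passages whose embeddings are most similar to the query."""
    ranked = sorted(docs, key=lambda d: cosine_similarity(query_vec, d[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_rag_prompt(question: str, context: list[str]) -> str:
    """Prepend the retrieved passages to the user's question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


# Toy 3-dimensional "embeddings" for illustration; real ones come from an embedding model
docs = [
    ("Revenue share ties vendor income to customer success.", [0.9, 0.1, 0.0]),
    ("ChromaDB stores vectors for similarity search.", [0.0, 0.2, 0.9]),
    ("Latency matters for real-time applications.", [0.1, 0.9, 0.1]),
]
query_vec = [0.8, 0.2, 0.1]  # pretend embedding of the question below
context = retrieve(query_vec, docs, k=1)
print(build_rag_prompt("What is a revenue share model?", context))
```

The assembled prompt would then be sent to a chat model as an ordinary user message, so the generation step needs no special API.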

A Platform Built for Developers, by Developers

The Inference Cloud powered by Qualcomm is more than just hardware. It’s your gateway to a comprehensive AI development stack built around the Qualcomm Imagine SDK.

- Multiple Modalities: LLMs, image generation, embeddings, transcription, translation, and more

- Framework Compatibility: Build with popular frameworks including LangChain, CrewAI, AutoGen, and RAG with ChromaDB

- Comprehensive Documentation: Access detailed SDK docs and API references with examples for basic usage, tool calling, and more

- Advanced Features: Take advantage of capabilities such as LiteLLM integration, Guarded LLM, and robust logging

- Flexible Client Options: Choose between synchronous and asynchronous clients based on your application needs
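An asynchronous client pays off when an application issues many independent requests, for example an agent that summarizes several documents at once: the network waits overlap instead of adding up. The sketch below illustrates the pattern with `asyncio.gather`. `fake_chat` is a hypothetical stand-in that sleeps to simulate latency; the Imagine SDK's actual async client class and method names are not shown here and should be taken from its documentation.

```python
import asyncio
import time


async def fake_chat(prompt: str, delay: float = 0.2) -> str:
    """Hypothetical stand-in for an async chat call; sleeps to simulate network latency."""
    await asyncio.sleep(delay)
    return f"answer to: {prompt}"


async def answer_all(prompts: list[str]) -> list[str]:
    """Run all requests concurrently; gather returns results in input order."""
    return await asyncio.gather(*(fake_chat(p) for p in prompts))


prompts = ["summarize doc A", "summarize doc B", "summarize doc C"]
start = time.perf_counter()
results = asyncio.run(answer_all(prompts))
elapsed = time.perf_counter() - start
print(results)
print(f"elapsed: {elapsed:.2f}s")  # close to one delay, not three, because the waits overlap
```

For a script that sends one request and waits for the answer, the synchronous client is simpler; the async client is worth its extra structure once requests can run in parallel.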

Getting Started: Simple and Seamless Integration

Qualcomm’s Imagine SDK makes it easy to start building. Here's a basic example of how to interact with the platform in Python:

```python
from imagine import ChatMessage, ImagineClient

# Initialize the client (the API endpoint and key are read from environment variables)
client = ImagineClient()

# Create a chat conversation with a single user message
chat_response = client.chat(
    messages=[
        ChatMessage(
            role="user",
            content=(
                "Weigh the strengths and weaknesses of a revenue share business "
                "model and offer scenarios when it would be favorable and when not."
            ),
        )
    ],
    model="Llama-3.1-8B",
)

# Print the text of the first response
print(chat_response.first_content)
```

This code sends a single prompt to the Llama-3.1-8B model and prints a response weighing the pros and cons of a revenue-share business model. In this example, the API endpoint and key are stored in environment variables, so neither appears in the source code.
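Keeping the endpoint and key in environment variables keeps secrets out of source control and lets the same code target different environments. The sketch below shows a fail-fast loader for that pattern. The variable names `INFERENCE_ENDPOINT_URL` and `INFERENCE_API_KEY` are hypothetical placeholders, not the names the Imagine SDK reads; use the names given in the SDK documentation.

```python
import os
from typing import Mapping, Optional, Tuple

# Hypothetical variable names for illustration; the SDK documents its own
ENDPOINT_VAR = "INFERENCE_ENDPOINT_URL"
KEY_VAR = "INFERENCE_API_KEY"


def load_endpoint_config(env: Optional[Mapping[str, str]] = None) -> Tuple[str, str]:
    """Return (endpoint, api_key) from the environment, failing fast if either is unset."""
    env = os.environ if env is None else env
    missing = [name for name in (ENDPOINT_VAR, KEY_VAR) if not env.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return env[ENDPOINT_VAR], env[KEY_VAR]


# Demonstrate with an explicit mapping instead of the real process environment
endpoint, api_key = load_endpoint_config(
    {ENDPOINT_VAR: "https://example.invalid/v1", KEY_VAR: "YOUR_API_KEY"}
)
print(endpoint)
```

Raising a clear error at startup is usually easier to debug than an authentication failure surfacing later from inside a request.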

Get Started Today

Visit the developer portal to access documentation, tutorials, and ready-to-use agent examples. Start building today and experience the power of accelerated AI computing with the Inference Cloud powered by Qualcomm—where performance meets possibility.

Qualcomm branded products are products of Qualcomm Technologies, Inc. and/or its subsidiaries.